Introduction

I am developing “Mia,” a talking cat-shaped robot that speaks dialects.

Currently, I am doing social media marketing for it with a focus on short videos (YouTube Shorts, TikTok, and Instagram Reels).

- YouTube: https://www.youtube.com/@mia-cat-dialect

- TikTok: tiktok.com/@mia_cat_robot

- Instagram: instagram.com/miacat0610

An earlier article described how to manually create short videos using the CapCut video editing software.

This time, I examine Luma AI's Dream Machine, a video-generation AI that can be used for free, to see whether it is practical for generating short videos.

What is Dream Machine?

“Dream Machine” is a video-generating AI service announced by AI startup Luma Labs on June 12 (local time). The service generates a 5-second video from a single photo and a prompt that describes the content of the video.

The stated generation speed is one frame per second, so the 120 frames of one clip (5 seconds at 24 fps) take about 2 minutes to generate.
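The arithmetic behind this figure can be checked with a few lines of Python. This is only a sketch based on the numbers quoted above; the function name and constants are my own and are not part of any Luma tool or API.

```python
# Rough estimate of generation time from the figures quoted above.
# All names here are illustrative; nothing below calls Dream Machine itself.
FPS = 24                 # frames per second of the output video
CLIP_SECONDS = 5         # length of one generated clip
SECONDS_PER_FRAME = 1.0  # stated generation speed (1 second per frame)


def generation_time_seconds(clip_seconds=CLIP_SECONDS, fps=FPS,
                            seconds_per_frame=SECONDS_PER_FRAME):
    """Return the estimated time in seconds to generate one clip."""
    frames = clip_seconds * fps
    return frames * seconds_per_frame


frames = CLIP_SECONDS * FPS
total = generation_time_seconds()
print(f"{frames} frames -> {total:.0f} s ({total / 60:.0f} min)")
# prints "120 frames -> 120 s (2 min)"
```

The 2 to 3 minutes I measured in practice is in the same range, so the stated speed looks roughly realistic.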

As for high-quality video generation AI, OpenAI has announced “Sora,” but Sora is not yet available to the public and can only be used by a few people. Dream Machine became publicly available ahead of Sora.

Incidentally, the free quota of “Dream Machine” is limited to 30 generations per month and 10 generations per day. When the free quota is used up, you are guided to the following paid plans.

- Up to 120 generations for $29.99

- Up to 400 generations for $99.99

- Up to 2,000 generations for $499.99

All plans work out to approximately $0.25 per generation.
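The per-generation price can be confirmed from the plan figures above. The following is a small sketch using only those numbers; the variable and function names are my own.

```python
# Plans quoted above: (price in USD, maximum number of generations).
PLANS = [(29.99, 120), (99.99, 400), (499.99, 2000)]


def price_per_generation(price_usd, generations):
    """Return the cost of a single generation when the plan is fully used."""
    return price_usd / generations


for price, count in PLANS:
    unit = price_per_generation(price, count)
    print(f"${price:.2f} / {count} generations = ${unit:.4f} each")
```

Each plan rounds to $0.25 per generation, so the larger plans buy more volume but no meaningful discount.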

The terms of use also state the following, so if you actually want to post the generated short videos on social media, you need a paid subscription.

If you wish to use the output for commercial purposes, you must subscribe to a paid subscription.

Trying AI video generation in several ways

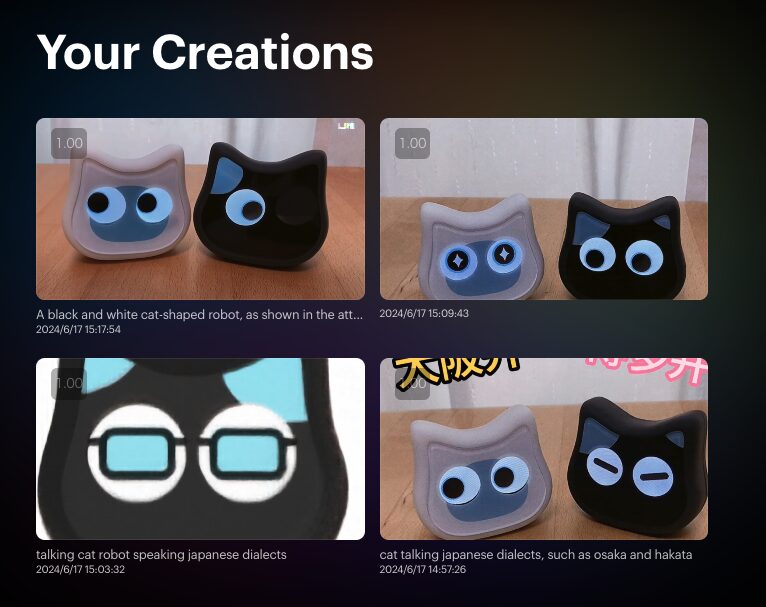

I tried four patterns this time.

Here are the thumbnails of the resulting short videos.

The prompts are in English because Japanese prompts often do not seem to work well.

Generation takes about 2 to 3 minutes per video, depending on the input.

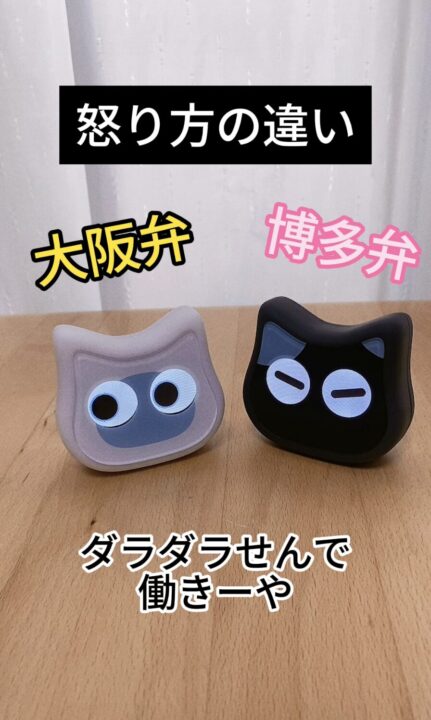

Pattern 1) Still image with message

Prompt

cat talking Japanese dialects, such as Osaka and Hakata

Input image

Output Video

The caption text gets garbled partway through and cat-like icons appear. It is interesting, but not practical.

Prompt

talking cat robot speaking Japanese dialects

Input image

Output Video

It’s very funny, but it no longer looks like our service. It’s amazing how the results go beyond anything I could imagine.

Image only

Since video generation from images is said to produce beautiful results, this time I left out the prompt text and entered only the image.

Input image

Output Video

There is some movement, but the result is almost the same as a still image, which makes it hard to use for this purpose.

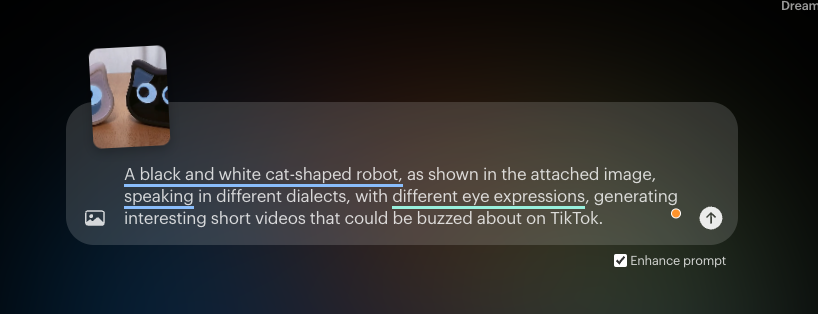

Image + detailed text prompt

Finally, I tried the following prompt.

First, I translated the prompt I had written in Japanese into English with DeepL.

A black and white cat-shaped robot, as shown in the attached image, speaking in different dialects, with different eye expressions, generating A black and white cat-shaped robot, as shown in the attached image, speaking in different dialects, with different eye expressions, generating interesting short videos that could be buzzed about on TikTok.

Then, I entered it into the Dream Machine prompt field together with the following image.

When I tried the above prompt, I got an error message “Unable to generate video”.

I suspected that the “generating interesting short videos that could be buzzed about on TikTok” part was the problem. When I omitted that part, the video was generated successfully.

Generated Video

I personally like it because the movement looks lively, but the black Mia’s eyes get distorted partway through the video, and the robot loses its original shape.

It is interesting, but posting this video could damage the brand image of the service.

It made me wish we could make the real robot move this realistically (although that would be extremely difficult).

Summary

In this case a real product already exists and the short videos need to show it as it is, so Dream Machine does not seem practical at this stage. However, the problem may also lie in my prompts.

If it were possible to keep the actual robot unchanged and change only the background of the video (for example, placing it in Antarctica or in a jungle), that would be very practical for this use.

Perhaps we will be able to do something like this in the near future, and I look forward to seeing what the future holds.