Introduction

I am developing “Mia,” a talking cat-shaped robot that speaks in dialects.

Since the beta release, several users have been using it, and one of them sent us this request:

“I’d like a ‘no talking mode’ as one of the speaking-frequency options.”

This post describes how we implemented that feature.

When we asked for more details, the user said:

“I am sometimes startled when Mia suddenly starts talking during an online meeting, and sometimes I want to concentrate on my studies or work.”

What to do about the UI/UX? → Display a toggle icon on the home screen

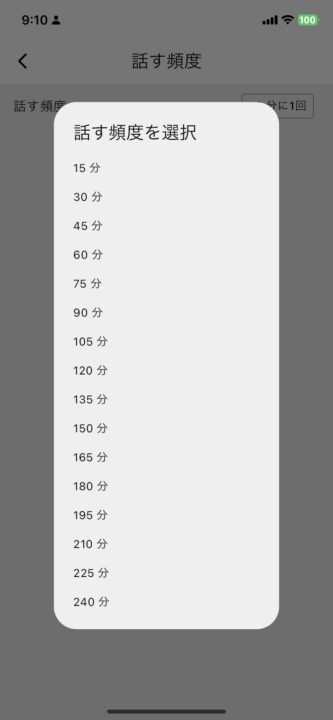

Currently, how often Mia speaks is configured under “Home screen → Settings tab → Frequency of speaking.”

Initially, I considered either adding “don’t speak” as one of the pull-down options here, or adding a switch labeled “switch to non-speaking mode” at the bottom of this screen.

However, an engineer on the development team commented:

“I personally think it would be better if it could be turned on and off in a clear, easily accessible place on the home screen. Currently, I have occasion to turn it off once every few days, and it may become the most frequently used function. With Alexa, there was a mute button right next to the volume.”

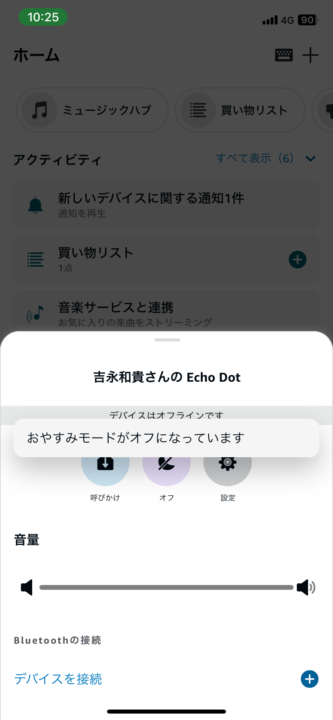

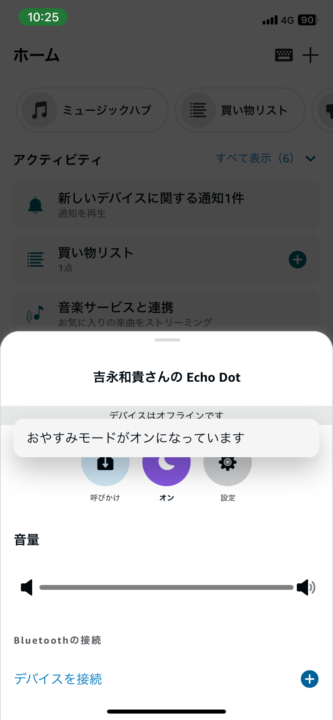

Prompted by that comment, I looked at Alexa, and sure enough, the mute (in Alexa’s case, good night mode) was displayed on the home screen as a toggle icon.

So, following Alexa’s example, I decided to add an item called “mute function” under the volume slider that can be toggled on and off with a switch. The default is OFF.

Current app home screen UI

Home screen UI after the change

Sequence of mute function modification

How to handle app-server-device interactions

Because many type conversions currently occur along the app → server → device path, the implementation policy below is summarized with a focus on those conversions.

Application (Dart)

- Convert the Dart object to a ProtoBuf message
  - The state of the mute switch is changed in the app’s UI and reflected in the Dart object.
  - The Dart object is converted to a ProtoBuf message.
- Send the ProtoBuf message to the server via API communication
  - The converted ProtoBuf message is sent to the server via the API.

Server (Go)

- ProtoBuf message to Go structure
  - Convert the received ProtoBuf message into a Go structure.
- Database column value update
  - Update the `is_muted` column in the database using the Go structure.
- Go structure to ProtoBuf message
  - Convert the updated Go structure to a ProtoBuf message.
- ProtoBuf message to JSON format
  - Convert the ProtoBuf message to JSON format.
- Update device shadow desired
  - Reflect the JSON data in the device shadow’s desired state.
- Send to the device via MQTT
  - Send the updated device shadow information to the device via MQTT.

Device (ESP32)

- Convert JSON data received via MQTT to a C++ structure
  - Parse the received JSON data and convert it to a C++ structure.
- Logic execution
  - Add logic to play audio and facial expressions only when `is_muted` is `false`.
- Update device shadow reported (JSON format)
  - Reflect the result of the logic execution in the device shadow’s reported state.
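To make the server-side end of this chain more concrete, here is a minimal Go sketch of the last conversion step: wrapping a config value in the `{"state":{"desired":{...}}}` envelope that a device shadow update document uses. The type names and `BuildDesiredDocument` are hypothetical and exist only for this illustration; the real project goes through generated Protobuf types instead of hand-written structs.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// DeviceConfig is a hypothetical stand-in for the device settings.
// A *bool with omitempty keeps "not set" (nil) distinct from an explicit false.
type DeviceConfig struct {
	IsMuted *bool `json:"is_muted,omitempty"`
}

type desiredState struct {
	Config DeviceConfig `json:"config"`
}

type shadowState struct {
	Desired desiredState `json:"desired"`
}

type shadowUpdate struct {
	State shadowState `json:"state"`
}

// BuildDesiredDocument (hypothetical helper) serializes a config into a
// shadow update document containing only the desired section.
func BuildDesiredDocument(cfg DeviceConfig) (string, error) {
	b, err := json.Marshal(shadowUpdate{
		State: shadowState{Desired: desiredState{Config: cfg}},
	})
	if err != nil {
		return "", err
	}
	return string(b), nil
}

func main() {
	muted := true
	doc, err := BuildDesiredDocument(DeviceConfig{IsMuted: &muted})
	if err != nil {
		panic(err)
	}
	fmt.Println(doc)
}
```

Using a pointer for the flag means an update that does not touch the mute setting produces an empty `config` object rather than silently sending `false`.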

App (Flutter)

Add mute switch and functionality (UI fixes)

Add mute switch

- Add a switch to the app’s home screen to enable the mute function.

- The `value` property is passed the current mute state (`isMuted`), and the `onChanged` property is set to the callback function (`_onMuteChanged`) that is called when the switch is toggled.

// Add the mute switch to the UI

Switch(

value: isMuted,

onChanged: _onMuteChanged,

),

Mute status update

- Add a function to send mute status to the server.

- Update the UI by calling `setState` each time the switch state changes; use `ref.read(userProvider.notifier)` to get the `userNotifier`, then call its `updateUser` method to update the user’s mute state.

void _onMuteChanged(bool isMuted) {

setState(() {

final userNotifier = ref.read(userProvider.notifier);

userNotifier.updateUser(User(isMuted: isMuted));

});

}

Add is_muted to the Dart class

- Add a new field `isMuted` to the `User` class. This field holds the state of the mute function.
- Initialize the `isMuted` field in the constructor of the `User` class.
- Have the `fromJson` and `toJson` methods include the `isMuted` field in the conversion to and from JSON.

lib/api/user.dart

class User {

// Existing fields...

final bool? isMuted; // Field added for the mute function

User({

// Initialization of existing fields...

this.isMuted,

});

factory User.fromJson(Map<String, dynamic> json) => _$UserFromJson(json);

Map<String, dynamic> toJson() => _$UserToJson(this);

}

Add is_muted to protobuf messages

- Add a new field `is_muted` to the `User` message. This field corresponds to the one in the Dart class and holds the state of the mute function.
- Use field number 21. Field numbers must not duplicate those of other fields.

protos/user.proto

message User {

// Existing fields...

bool is_muted = 21; // Field added for the mute function

}

Run the following protoc command in the project root to generate the Dart gRPC client code from the proto file. The generated code serializes Dart objects to Protobuf messages and deserializes them back.

protoc --dart_out=grpc:lib/grpc -Iprotos protos/user.proto

Server (Go)

DB update: add is_muted column to the users table

Creating a Migration File

Create a new migration file and add an is_muted column to the users table.

-- 20240629000000_add_is_muted_to_users.up.sql

ALTER TABLE users ADD COLUMN is_muted BOOLEAN DEFAULT FALSE;

Migration Execution

migrate -path ./migrations -database ${MYSQL_MIGRATE_DSN} up

Add is_muted field to the User structure

user.go

package clocky_be

import (

"time"

"github.com/EarEEG-dev/clocky_be/types"

)

type User struct {

// Existing fields...

IsMuted types.Null[bool] `db:"is_muted" json:"is_muted"`

}

type UserUpdate struct {

// Existing fields...

IsMuted types.Null[bool] `db:"is_muted" json:"is_muted"`

}

Update the ToDeviceConfig function

The ToDeviceConfig function converts the data in the User structure into a Protobuf DeviceConfig message, which is then converted to JSON and reflected in the device shadow.

device_config.go

package clocky_be

import (

"github.com/EarEEG-dev/clocky_be/pb"

"github.com/EarEEG-dev/clocky_be/types"

)

func ToDeviceConfig(u *User) pb.DeviceConfig {

return pb.DeviceConfig{

// Existing fields...

IsMuted: u.IsMuted.Ptr(), // Field added for the mute function

}

}
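The `Ptr()` call above turns a nullable value into a pointer. The post does not show how `types.Null` is defined, so the following is only a sketch of what such a wrapper might look like, assuming `V` and `Valid` fields (those two names do appear in the handler code later in this post). `Null`, `Ptr`, and `describe` here are illustrative, not the project’s actual implementation.

```go
package main

import "fmt"

// Null is a hypothetical nullable wrapper; the real types.Null[T]
// definition is not shown in this post.
type Null[T any] struct {
	V     T    // the value, meaningful only when Valid is true
	Valid bool // false means the value is absent (SQL NULL)
}

// Ptr returns nil when the value is absent, otherwise a pointer to a copy.
func (n Null[T]) Ptr() *T {
	if !n.Valid {
		return nil
	}
	v := n.V
	return &v
}

// describe shows the three states a *bool can represent.
func describe(p *bool) string {
	if p == nil {
		return "unset"
	}
	if *p {
		return "muted"
	}
	return "unmuted"
}

func main() {
	fmt.Println(describe(Null[bool]{}.Ptr()))
	fmt.Println(describe(Null[bool]{V: false, Valid: true}.Ptr()))
	fmt.Println(describe(Null[bool]{V: true, Valid: true}.Ptr()))
}
```

The point of the pointer is that a NULL database column and an explicit `false` stay distinguishable all the way to the device shadow.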

Device shadow update

handle_update_user.go

package clocky_be

import (

"net/http"

"github.com/labstack/echo/v4" // assumed import path; the post does not show which Echo version is used

"github.com/EarEEG-dev/clocky_be/pb"

)

func (h *UserHandler) HandleUpdateUser(c echo.Context) error {

uid := c.Get("uid").(string)

var params UserUpdate

if err := c.Bind(&params); err != nil {

return err

}

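// (The step that applies params to the database is not shown in this excerpt.)

// Fetch the user information after the update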

updatedUser, err := GetUser(h.db, uid)

if err != nil {

return err

}

// When a device ID exists

if updatedUser.DeviceID.Valid {

dc := ToDeviceConfig(updatedUser)

desired := &pb.PublishShadowDesired{

State: &pb.ShadowDesiredState{

Desired: &pb.ShadowDesired{

Config: &dc,

},

},

}

// Reflect the change in the device shadow

if err = h.sm.UpdateShadow(c.Request().Context(), updatedUser.DeviceID.V, desired); err != nil {

c.Logger().Errorf("failed to update device shadow: %v", err)

return echo.NewHTTPError(http.StatusInternalServerError, "failed to update device shadow")

}

}

return c.JSON(http.StatusOK, updatedUser)

}

Now, when the mute function is turned on from the app, the status will be sent to the server, reflected in the device shadow, and communicated to the ESP32 device.

Device (ESP32, C++)

Receive and parse JSON data

Parse JSON data received via MQTT and convert it into a C++ DeviceConfig structure.

device_config.cpp

#include "device_config.h"

std::optional<DeviceConfig> readDeviceConfig(const JsonVariant &json, const char *key) {

if (json.containsKey(key)) {

DeviceConfig state;

state.is_muted = read<bool>(json[key], "is_muted");

return state;

}

return std::nullopt;

}

void writeDeviceConfig(const DeviceConfig &config, JsonObject &obj) {

writeOpt(obj, "is_muted", config.is_muted);

}

Logic control of facial expression playback

- In the `loop` function of main.cpp, use the `is_muted` field of the `DeviceConfig` structure to play the voice and facial expressions only when `is_muted` is `false`.
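Because the flag is optional, the gate has more than two cases to consider. The sketch below, written in Go for illustration only (the device itself runs C++), spells out the decision under the assumption that a missing config or a missing `is_muted` value is treated as “not muted.” `DeviceConfig` and `ShouldRender` are hypothetical names for this example.

```go
package main

import "fmt"

// DeviceConfig is a hypothetical stand-in; a nil IsMuted means the field
// has not been received yet.
type DeviceConfig struct {
	IsMuted *bool
}

// ShouldRender reports whether audio and expressions should play.
// Assumption in this sketch: anything other than an explicit true means "play".
func ShouldRender(cfg *DeviceConfig) bool {
	if cfg == nil || cfg.IsMuted == nil {
		return true
	}
	return !*cfg.IsMuted
}

func main() {
	on, off := true, false
	fmt.Println("no config:   ", ShouldRender(nil))
	fmt.Println("field unset: ", ShouldRender(&DeviceConfig{}))
	fmt.Println("muted=false: ", ShouldRender(&DeviceConfig{IsMuted: &off}))
	fmt.Println("muted=true:  ", ShouldRender(&DeviceConfig{IsMuted: &on}))
}
```

Defaulting to “play” keeps a freshly flashed device, which has not yet synced its shadow, behaving as it did before the mute feature existed.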

main.cpp

void loop() {

if (inSafeMode) {

safeModeLoop();

return;

}

// Monitor changes in the network connection state

monitorWiFiConnectionChange();

// Processing when a network connection is available

if (isWiFiConnected()) {

executeWiFiConnectedRoutines();

}

// Button handling

buttonManager.handleButtonPress();

// Play audio and expressions only when is_muted is false

auto desiredConfig = SyncShadow::getInstance().getDesiredConfig();

if (!desiredConfig || !desiredConfig->is_muted.value_or(false)) {

ExpressionService::getInstance().render();

} else {

Serial.println("is muted function called"); // For debugging

}

delay(10);

}

Reflect the is_muted change in the device shadow reported state

When the SyncShadow::updateConfig method is called, the changed state of the is_muted field is reflected in the device shadow. This synchronizes the is_muted state between the server and the device.
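Synchronizing in this way comes down to comparing what the server asked for (desired) with what the device last reported, and reporting only what differs. The following Go sketch illustrates that comparison for a single optional field. It is an illustration only: the device code is C++, and `DeviceConfig` and `DiffConfig` are hypothetical names chosen for this example.

```go
package main

import "fmt"

// DeviceConfig is a hypothetical stand-in with one optional field.
type DeviceConfig struct {
	IsMuted *bool
}

// DiffConfig returns a config holding only the desired fields that are set
// and differ from the reported ones, or nil when there is nothing to report.
func DiffConfig(desired, reported DeviceConfig) *DeviceConfig {
	if desired.IsMuted == nil {
		return nil // nothing requested for this field
	}
	if reported.IsMuted != nil && *reported.IsMuted == *desired.IsMuted {
		return nil // device already matches the request
	}
	v := *desired.IsMuted
	return &DeviceConfig{IsMuted: &v}
}

func main() {
	on, off := true, false
	same := DiffConfig(DeviceConfig{IsMuted: &on}, DeviceConfig{IsMuted: &on})
	fmt.Println("already in sync, diff is nil:", same == nil)

	changed := DiffConfig(DeviceConfig{IsMuted: &on}, DeviceConfig{IsMuted: &off})
	fmt.Println("needs report, is_muted =", *changed.IsMuted)
}
```

Returning nil for “no difference” lets the caller skip the report entirely, which avoids publishing a shadow update on every loop iteration.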

main.cpp

// Apply device config changes and report them to the device shadow

void applyAndReportConfigUpdates() {

Logger &logger = Logger::getInstance();

SyncShadow &syncShadow = SyncShadow::getInstance();

auto desiredConfig = syncShadow.getDesiredConfig();

auto reportedConfig = syncShadow.getReportedConfig();

auto optConfigDiff = getDeviceConfigDiff(desiredConfig, reportedConfig);

// Skip when nothing has changed

if (!optConfigDiff) {

return;

}

logger.debug("device config changed");

// The settings that changed

auto configDiff = optConfigDiff.value();

logger.debug("config diff: " + serializeDeviceConfig(configDiff));

// When only the config changed

if (configDiff.is_muted.has_value()) {

// Report the device config to the device shadow

if (auto result = syncShadow.reportConfig(configDiff); !result) {

logger.error(ErrorCode::CONFIG_REPORT_FAILED, "failed to report config: " + result.error());

return;

}

return;

}

}

With the steps above, the mute function is integrated end to end, from the app’s UI to the device’s expression playback.

Operation check

The mute function is off in the app by default

Log when the app’s mute function is turned on.

server

clocky_api_local | 2024/06/30 12:04:16 sent message to user: 1

clocky_api_local | 2024/06/30 12:04:26 sendShadow: userId=1, reported=config:{talk_frequency:15 weather_announcement_time:{hour:8} birth_date:{year:1988 month:10 day:1} phrase_type:"standard" talk_start_time:{hour:7} talk_end_time:{hour:22} volume:50 firmware_version:"1.0.3" is_muted:true} wakeup_time:25 downloading:{} connected:true last_action_time:1719748976 last_periodic_talk_time:1719748971

Confirm that the value of the is_muted column in the database’s users table has changed from 0 to 1.

Confirm that desired→is_muted in device shadow has been changed from false to true.

device

21:04:08.670 > MQTTPubSubClient::onMessage: $aws/things/device_id/shadow/update/delta {"version":23,"timestamp":1719749048,"state":{"config":{"is_muted":true}},"metadata":{"config":{"is_muted":{"timestamp":1719749048}}}}

21:13:57.136 > is muted function called

Confirm that reported→is_muted in device shadow has been changed from false to true.
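When checking logs like these, it can help to decode the delta payload and look at the one field of interest. The Go sketch below parses the delta message copied from the device log in this section and extracts `is_muted`. The struct and `ParseDeltaIsMuted` are hypothetical helpers for inspection only; the device itself parses this payload in C++.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// shadowDelta models only the parts of the delta message used here;
// other keys such as "metadata" are ignored by encoding/json.
type shadowDelta struct {
	Version   int   `json:"version"`
	Timestamp int64 `json:"timestamp"`
	State     struct {
		Config struct {
			IsMuted *bool `json:"is_muted"`
		} `json:"config"`
	} `json:"state"`
}

// ParseDeltaIsMuted (hypothetical helper) returns the is_muted value carried
// by a delta payload, or nil when the delta does not mention it.
func ParseDeltaIsMuted(payload []byte) (*bool, error) {
	var d shadowDelta
	if err := json.Unmarshal(payload, &d); err != nil {
		return nil, err
	}
	return d.State.Config.IsMuted, nil
}

func main() {
	// Payload taken from the device log in this post.
	payload := []byte(`{"version":23,"timestamp":1719749048,"state":{"config":{"is_muted":true}},"metadata":{"config":{"is_muted":{"timestamp":1719749048}}}}`)

	muted, err := ParseDeltaIsMuted(payload)
	if err != nil {
		panic(err)
	}
	if muted == nil {
		fmt.Println("delta does not change is_muted")
		return
	}
	fmt.Println("delta sets is_muted to", *muted)
}
```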

We then confirmed that when mute mode is turned off, the eyes start moving again and audio plays at the user-set speaking frequency.

Now, when you want to concentrate, such as during an online meeting or while studying, you can stop Mia with a single tap from the app.