Introduction.

We are developing “Mia,” a talking cat-shaped robot that speaks various dialects.

In a previous article here, we described the implementation of a voice recording and playback function using flutter_sound.

This time, as a continuation, we would like to implement the part where recorded audio files are uploaded from the application to the server (AWS S3) via API communication.

API Design

Endpoint: /app/upload_voice_with_schedule

Method: POST

Request body

- phrase: Phrase text (string)

- is_private: whether the phrase is private (bool)

- time: playback time (optional, types.HourMinute)

- days: list of playback days (optional, []string)

- voice: audio file (multipart/form-data)
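To make the request shape concrete, here is a minimal sketch in Go of how a client could assemble such a multipart/form-data body using only the standard library. The function name buildUploadRequest, the host, and the file name recording.m4a are placeholders chosen for illustration; the file part is named voice because that is the form field the handler later in this article reads. The optional time and days fields are left out to keep the sketch short.

```go
package main

import (
	"bytes"
	"fmt"
	"mime/multipart"
	"net/http"
)

// buildUploadRequest (hypothetical helper) assembles a multipart/form-data
// request carrying the text fields and the audio bytes.
func buildUploadRequest(url, phrase string, isPrivate bool, audio []byte) (*http.Request, error) {
	var body bytes.Buffer
	w := multipart.NewWriter(&body)

	// Plain text fields.
	if err := w.WriteField("phrase", phrase); err != nil {
		return nil, err
	}
	if err := w.WriteField("is_private", fmt.Sprint(isPrivate)); err != nil {
		return nil, err
	}

	// File part; "voice" is the field name the server handler reads.
	part, err := w.CreateFormFile("voice", "recording.m4a")
	if err != nil {
		return nil, err
	}
	if _, err := part.Write(audio); err != nil {
		return nil, err
	}

	// Close writes the trailing boundary; the body is incomplete without it.
	if err := w.Close(); err != nil {
		return nil, err
	}

	req, err := http.NewRequest(http.MethodPost, url, &body)
	if err != nil {
		return nil, err
	}
	// The content type carries the boundary generated by the writer.
	req.Header.Set("Content-Type", w.FormDataContentType())
	return req, nil
}

func main() {
	// The host below is a placeholder, not a real server.
	req, err := buildUploadRequest(
		"http://localhost:8080/app/upload_voice_with_schedule",
		"hello", false, []byte("fake audio"))
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.Path)
}
```

Note that the Content-Type header has to come from the multipart writer, because it includes the boundary string that separates the parts.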

Process Flow

- Receive requests from clients.

- Upload the audio file to S3 and get voice_path.

- Create a record of the phrase in the database.

- If a schedule exists, create a schedule record.

Server side (Go)

Implement the UserPhraseHandler method

Create a new handler method, HandleUploadVoiceWithSchedule, for audio file uploads.

Context and Dependency Management

- The UserPhraseHandler structure holds the database connection (sqlx.DB) and configuration (Config). This allows API handlers to access the database and configuration.

Audio file processing

- Use c.FormFile("voice") to obtain the voice file and open it with file.Open(). Then buf.ReadFrom(src) reads the file data into a buffer, and buf.Bytes() returns it as a byte slice.

Upload to S3

- Generate a unique file name using the current date and time and upload the audio data to S3 using the UploadStreamToS3 function; the S3 bucket name and file key are predefined.

Transaction Initiation and Phrase Creation

- Start a database transaction and call CreateUserPhrase to create a new phrase in the database. Roll back the transaction if it fails.

Update audio file paths

- Associate the S3 path of the uploaded voice file with the phrase in the database by updating the voice_path field.

Sending API Responses

- Upon successful completion, the new phrase data is returned to the client in JSON format with HTTP status 201 (Created).

user_phrase_handler.go

// Upload a voice file from the app
func (h *UserPhraseHandler) HandleUploadVoiceWithSchedule(c echo.Context) error {
	// The phrase text is required.
	phraseText := c.FormValue("phrase")
	if phraseText == "" {
		return echo.NewHTTPError(http.StatusBadRequest, "Phrase text is required")
	}

	// Look up the authenticated user from the uid stored in the context.
	uid := c.Get("uid").(string)
	user, err := GetUser(h.db, uid)
	if err != nil {
		return echo.NewHTTPError(http.StatusInternalServerError, "User not found")
	}

	// Read the uploaded voice file into memory.
	file, err := c.FormFile("voice")
	if err != nil {
		return echo.NewHTTPError(http.StatusBadRequest, "Voice file is required")
	}
	src, err := file.Open()
	if err != nil {
		return echo.NewHTTPError(http.StatusInternalServerError, "Unable to open file")
	}
	defer src.Close()

	var buf bytes.Buffer
	_, err = buf.ReadFrom(src)
	if err != nil {
		return echo.NewHTTPError(http.StatusInternalServerError, "Failed to read file")
	}
	audioData := buf.Bytes()

	// Build the S3 key from a timestamp and upload the audio data.
	ctx := context.Background()
	timestamp := time.Now().Format("20060102-150405")
	fileName := fmt.Sprintf("user_upload_%s.mp3", timestamp)
	key := fmt.Sprintf("users/%d/user_phrases/%s", user.ID, fileName)
	err = UploadStreamToS3(ctx, "your-bucket-name", key, audioData)
	if err != nil {
		return echo.NewHTTPError(http.StatusInternalServerError, "Failed to upload to S3")
	}

	// Create the phrase and store its voice path in one transaction.
	tx, err := h.db.Beginx()
	if err != nil {
		return echo.NewHTTPError(http.StatusInternalServerError, "Failed to start transaction")
	}
	phrase, err := CreateUserPhrase(tx, user.ID, phraseText, true, true)
	if err != nil {
		tx.Rollback()
		return echo.NewHTTPError(http.StatusInternalServerError, "Failed to create phrase")
	}
	err = UpdateUserPhraseVoicePath(tx, phrase.ID, user.ID, key)
	if err != nil {
		tx.Rollback()
		return echo.NewHTTPError(http.StatusInternalServerError, "Failed to update voice path")
	}

	var schedule *PhraseSchedule
	// Save the schedule information (if present)
	var req struct {
		Time *types.HourMinute `json:"time,omitempty"`
		Days *[]string         `json:"days,omitempty"`
	}
	if err := c.Bind(&req); err == nil {
		if req.Time != nil && req.Days != nil {
			schedule, err = CreatePhraseSchedule(tx, user.ID, phrase.ID, *req.Time, *req.Days)
			if err != nil {
				tx.Rollback()
				return echo.NewHTTPError(http.StatusInternalServerError, "Failed to create phrase schedule")
			}
		}
	}

	if err := tx.Commit(); err != nil {
		return echo.NewHTTPError(http.StatusInternalServerError, "Failed to commit transaction")
	}

	return c.JSON(http.StatusCreated, map[string]interface{}{
		"phrase":   phrase,
		"schedule": schedule,
	})
}
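One point worth noting about the schedule part: the Flutter client in this article sends time and days as JSON-encoded strings inside multipart form fields, and depending on the Echo version and struct tags, c.Bind may not populate the struct from them. As a hedged alternative, the two form values could be decoded explicitly. In the sketch below, parseSchedule, schedule, and hourMinute are hypothetical, and the hour/minute JSON shape is an assumption, since the real types.HourMinute and the output of time.toJson() are not shown here.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// hourMinute is a stand-in for types.HourMinute; its JSON shape is assumed.
type hourMinute struct {
	Hour   int `json:"hour"`
	Minute int `json:"minute"`
}

// schedule groups the two optional fields once they are decoded.
type schedule struct {
	Time hourMinute
	Days []string
}

// parseSchedule decodes the raw "time" and "days" form values.
// It returns (nil, nil) when neither is set, and an error when only one
// of them is set or when either one is not valid JSON.
func parseSchedule(timeField, daysField string) (*schedule, error) {
	if timeField == "" && daysField == "" {
		return nil, nil // no schedule requested
	}
	if timeField == "" || daysField == "" {
		return nil, errors.New("time and days must be set together")
	}

	var s schedule
	if err := json.Unmarshal([]byte(timeField), &s.Time); err != nil {
		return nil, fmt.Errorf("time: %w", err)
	}
	if err := json.Unmarshal([]byte(daysField), &s.Days); err != nil {
		return nil, fmt.Errorf("days: %w", err)
	}
	if len(s.Days) == 0 {
		return nil, errors.New("days must not be empty")
	}
	return &s, nil
}

func main() {
	// In the handler these strings would come from c.FormValue("time")
	// and c.FormValue("days").
	s, err := parseSchedule(`{"hour":7,"minute":30}`, `["Monday","Wednesday"]`)
	if err != nil {
		panic(err)
	}
	fmt.Println(s.Time.Hour, s.Time.Minute, s.Days)
}
```

This also mirrors the client-side rule that the playback time and playback days must be set together or not at all.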

API Endpoint Creation

Define a route that accepts requests for the path /upload_voice_with_schedule using the POST method. Call the HandleUploadVoiceWithSchedule function created earlier.

appGroup.POST("/upload_voice_with_schedule", uph.HandleUploadVoiceWithSchedule)

Application side (Flutter)

uploadVoiceWithSchedule function: Send audio files and related information to the server

- http.MultipartRequest is used to send data including audio files. This class sends binary data such as files together with other form data in a single request.

- In request.fields, the phrase and its publication setting are set as form data.

- The audio file (local file path) is added to the data to be sent with request.files.add.

api_client.dart

api_client.dart communicates with the server and processes API requests.

Future<void> uploadVoiceWithSchedule(String phrase, String filePath,
    bool isPrivate, HourMinute? time, List<String>? days) async {
  final url = Uri.parse('$apiUrl/upload_voice_with_schedule');
  final headers = await apiHeaders();

  // Build a multipart request carrying the form fields and the audio file.
  final request = http.MultipartRequest('POST', url)
    ..headers.addAll(headers)
    ..fields['phrase'] = phrase
    ..fields['is_private'] = isPrivate.toString()
    ..fields['recorded'] = 'true'
    ..files.add(await http.MultipartFile.fromPath('voice', filePath));

  // The schedule fields are optional and sent as JSON-encoded strings.
  if (time != null) {
    request.fields['time'] = jsonEncode(time.toJson());
  }
  if (days != null && days.isNotEmpty) {
    request.fields['days'] = jsonEncode(days);
  }

  final response = await request.send();
  if (response.statusCode != 201) {
    final responseBody = await response.stream.bytesToString();
    throw Exception('Failed to upload voice and phrase: $responseBody');
  }
}

user_phrase_notifier.dart

The user_phrase_notifier.dart is responsible for managing the application state and ensuring that the user interface reflects the latest data.

Future<void> uploadVoiceWithSchedule(String phrase, String filePath,
    bool isPrivate, HourMinute? time, List<String>? days) async {
  try {
    await apiClient.uploadVoiceWithSchedule(
        phrase, filePath, isPrivate, time, days);
    // Reload the phrase list so the UI reflects the new phrase.
    await loadUserPhrases();
  } catch (e) {
    throw Exception('Failed to upload voice and schedule: $e');
  }
}

Retrieve and send audio file path

Obtain the path to the voice file from “RecordVoiceScreen”, which performs voice recording, and use that file path in “AddPhraseScreen” to send data to the API.

lib/screens/home/record_voice_screen.dart (voice recording playback screen)

First, when the “Done” button is pressed, the audio file path is passed to “AddPhraseScreen”.

void _onComplete() {
  Navigator.of(context).pop(_recordedFile?.path);
}

lib/screens/home/add_phrase_screen.dart (phrase add screen)

If the user has recorded an audio file, the path to that file is stored in _recordedFilePath.

Handling of phrase saving and updating

- If there is an audio file, upload the phrase along with the audio file using the uploadVoiceWithSchedule method.

- If there is no audio file, call the addUserPhraseWithSchedule or updateUserPhraseWithSchedule method to send only the phrase to the server.

// The revised _AddPhraseScreenState class
class _AddPhraseScreenState extends ConsumerState<AddPhraseScreen> {
  late TextEditingController _phraseController;
  String _newPhrase = '';
  bool _isPublic = true;
  HourMinute? _selectedTime;
  List<String> _selectedDays = [];
  bool _showOptions = false;
  String? _errorText;
  final int maxPhraseLength = 100;
  String? _recordedFilePath; // Stores the audio file path

  // ... (the rest of the code is unchanged)

  Future<void> _addOrUpdatePhrase() async {
    // The playback time and playback days must be set together or not at all.
    if ((_selectedTime != null && _selectedDays.isEmpty) ||
        (_selectedTime == null && _selectedDays.isNotEmpty)) {
      setState(() {
        // "Set both the playback time and playback days, or leave both unset."
        _errorText = '再生時間と再生曜日は両方設定するか、両方未設定にしてください。';
      });
      return;
    }

    List<String> englishDays = translateDaysToEnglish(_selectedDays);
    final userPhraseNotifier = ref.read(userPhraseProvider.notifier);

    try {
      if (_recordedFilePath != null) {
        // When there is a recorded file
        await userPhraseNotifier.uploadVoiceWithSchedule(
          _newPhrase,
          _recordedFilePath!,
          !_isPublic, // isPrivate setting
          _selectedTime,
          englishDays,
        );
      } else {
        // When there is no audio file
        if (widget.phraseWithSchedule == null) {
          await userPhraseNotifier.addUserPhraseWithSchedule(
              _newPhrase, false, !_isPublic, _selectedTime, englishDays);
        } else {
          await userPhraseNotifier.updateUserPhraseWithSchedule(
            widget.phraseWithSchedule!.phrase.id,
            _newPhrase,
            false,
            !_isPublic,
            _selectedTime,
            englishDays,
          );
        }
      }
      Navigator.of(context).pop();
    } catch (e) {
      setState(() {
        // "Failed to save the phrase: $e"
        _errorText = 'フレーズの保存に失敗しました: $e';
      });
    }
  }

  Future<void> _navigateToRecordVoiceScreen() async {
    // RecordVoiceScreen pops with the recorded file path (or null).
    final recordedFilePath = await Navigator.push(
      context,
      MaterialPageRoute(builder: (context) => RecordVoiceScreen()),
    );
    if (recordedFilePath != null) {
      setState(() {
        _recordedFilePath = recordedFilePath;
      });
    }
  }

  // ... (the rest of the code is unchanged)
}

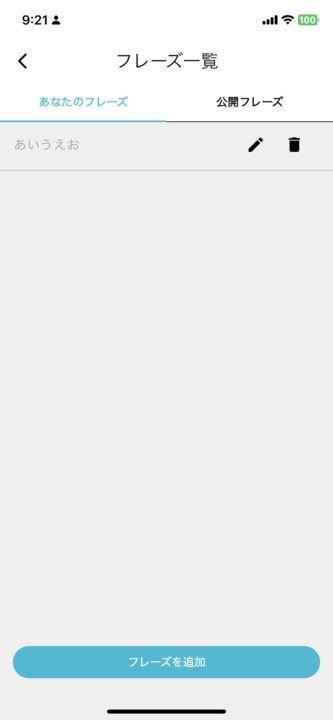

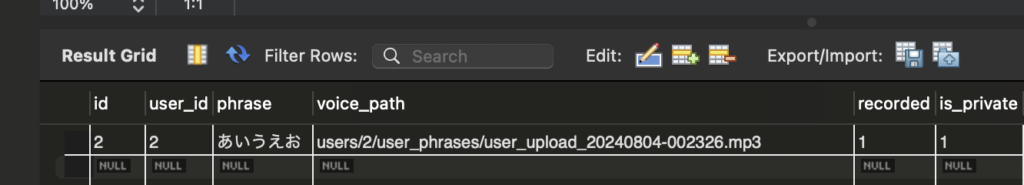

Operation check

After recording voice with the application, click the play button.

The following API request is sent on the app side

flutter: URL: http://192.168.XX.XXX:8080/app/upload_voice_with_schedule

flutter: Headers: {Content-Type: application/json, Accept: application/json, Authorization: Bearer XXX}

flutter: Fields: {phrase: あいうえお, is_private: false, recorded: true}

flutter: File Path: /var/mobile/Containers/Data/Application/C66FD757-79C2-444E-9B94-BDEDA6FDCE32/Library/Caches/recording.m4a

Server-side log

clocky_api_local | {"time":"2024-08-04T00:23:26.959601213Z","id":"","remote_ip":"192.168.65.1","host":"192.168.10.104:8080","method":"POST","uri":"/app/upload_voice_with_schedule","user_agent":"Dart/3.4 (dart:io)","status":201,"error":"","latency":482183917,"latency_human":"482.183917ms","bytes_in":38182,"bytes_out":239}

The voice_path is stored in the DB.

In addition, when the corresponding AWS S3 was accessed and the audio file was downloaded, the recorded audio was successfully played back.

The next step is to develop the part that analyzes the phrase content based on the uploaded audio and assigns emotion IDs. The mapped emotion ID (expression_id) will eventually be shown during voice playback through Mia’s eye expressions (joy, anger, sorrow, and pleasure). To be continued in the next article.