Introduction.

We are developing “Mia,” a talking cat-shaped robot that speaks various dialects.

In the previous article, we implemented a function that lets users enter any text in the application and have Mia speak it using text-to-speech synthesis.

This time, we would like to implement a voice recording and playback function.

Users can play back audio recorded in the application, optionally with a playback schedule, and can set each recording to public or private. In addition to voice recordings, users can upload arbitrary audio files (e.g., cat meows or the voice of a favorite idol).

Application

- Use the voice recording package (flutter_sound): https://pub.dev/packages/flutter_sound

- After recording the audio, save it to _filePath (local storage), then upload it to the server.

Server

- Create an API that receives voice data from the application (HandleUploadVoice).

- Upload the voice data to S3 and add voice_path to the record.

This article covers everything up to recording and playing back audio in the application.

Add microphone permission to Info.plist and Podfile

The flutter_sound package will be used for the voice recording function.

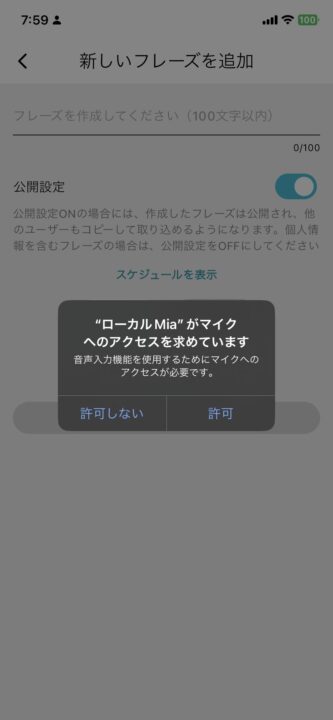

To use the voice recording feature of the flutter_sound package on iOS, the app must request permission to access the microphone.

Add the microphone permission key and value to ios/Runner/Info.plist:

```xml
<!-- The message means: "Microphone access is required to use the voice input feature." -->
<key>NSMicrophoneUsageDescription</key>
<string>音声入力機能を使用するためにマイクへのアクセスが必要です。</string>
```

I thought this would be enough, but when I built the app, the following error occurred.

The error says that the app tried to access the microphone, but permission had not been granted.

[ERROR:flutter/runtime/dart_vm_initializer.cc(41)] Unhandled Exception: Instance of 'RecordingPermissionException'

#0 _AddPhraseScreenState._initializeRecorder (package:clocky_app/screens/home/add_phrase_screen.dart:119:7)

After some research, I found that PERMISSION_MICROPHONE=1 must also be added to the Podfile.

ios/Podfile

```ruby
post_install do |installer|
  installer.pods_project.targets.each do |target|
    # Start of the permission_handler configuration
    target.build_configurations.each do |config|
      # Preprocessor definitions can be found in: https://github.com/Baseflow/flutter-permission-handler/blob/master/permission_handler_apple/ios/Classes/PermissionHandlerEnums.h
      config.build_settings['GCC_PREPROCESSOR_DEFINITIONS'] ||= [
        '$(inherited)',

        ## dart: PermissionGroup.microphone
        'PERMISSION_MICROPHONE=1',
      ]
    end
  end
end
```

After applying these settings and rebuilding, the microphone permission dialog appeared when opening the voice recording screen.

Implementation of voice recording and playback function

We will implement it by referring to the example in flutter_sound’s simple_recorder.dart.

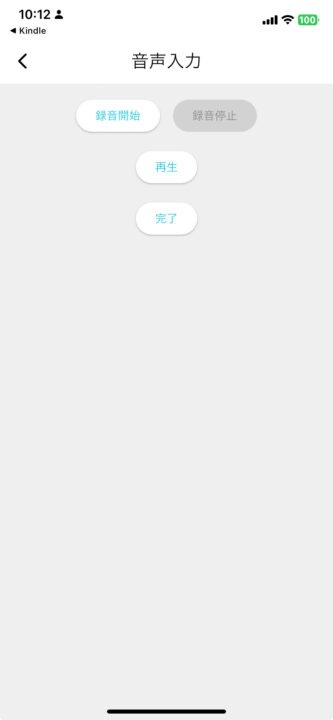

Here is the actual voice recording and playback function.

Start Recording (_startRecording) and Stop Recording (_stopRecording)

- When recording is started, the audio data is saved to the specified file (e.g., recording.m4a).

- When recording is stopped, recording ends and the file path is output to the console.
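Because the file name is fixed (recording.m4a), each new recording overwrites the previous one. If separate files are wanted, a timestamped name is one option. The following is a minimal sketch in plain Dart; `recordingFileName` is a hypothetical helper introduced here for illustration and is not part of flutter_sound.

```dart
/// Builds a timestamped file name such as `recording_20240503_090702.m4a`.
/// Hypothetical helper: the name format is an assumption, not from the article.
String recordingFileName(DateTime now, {String extension = 'm4a'}) {
  // Pads single-digit values so that names sort chronologically.
  String two(int n) => n.toString().padLeft(2, '0');

  final date = '${now.year}${two(now.month)}${two(now.day)}';
  final time = '${two(now.hour)}${two(now.minute)}${two(now.second)}';
  return 'recording_${date}_$time.$extension';
}
```

The result could then be joined to the temporary directory path, for example `File('${directory.path}/${recordingFileName(DateTime.now())}')`.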

Additional import of audio_session and flutter_sound_platform_interface

- audio_session: This package manages audio playback and recording settings, for example to prevent other sounds from playing while recording.

- flutter_sound_platform_interface: The interface used in the backend of the flutter_sound library. It supports the audio recording and playback functions.

AudioSession configuration section

This part of the code configures the audio session. Specifically:

- AVAudioSessionCategory.playAndRecord: A category that allows both recording and playback.

- allowBluetooth and defaultToSpeaker: Options that allow a Bluetooth headset to be used and route playback to the device’s speaker by default.

- AVAudioSessionMode.spokenAudio: Sets the audio mode for spoken audio such as conversation.

- androidAudioAttributes: Sets the audio attributes on Android devices.

```dart
import 'dart:io';

import 'package:audio_session/audio_session.dart';
import 'package:flutter/material.dart';
import 'package:flutter_sound/flutter_sound.dart';
import 'package:flutter_sound_platform_interface/flutter_sound_recorder_platform_interface.dart';
import 'package:path_provider/path_provider.dart';
import 'package:permission_handler/permission_handler.dart';

class RecordVoiceScreen extends StatefulWidget {
  @override
  _RecordVoiceScreenState createState() => _RecordVoiceScreenState();
}

class _RecordVoiceScreenState extends State<RecordVoiceScreen> {
  FlutterSoundRecorder? _recorder;
  FlutterSoundPlayer? _player;
  bool _isRecording = false;
  bool _isPlaying = false;
  bool _recorderInitialized = false;
  bool _playerInitialized = false;
  File? _recordedFile;
  String? _errorText;
  final Codec _codec = Codec.aacMP4;

  @override
  void initState() {
    super.initState();
    _initializeRecorder();
    _initializePlayer();
  }

  @override
  void dispose() {
    _recorder?.closeRecorder();
    _player?.closePlayer();
    super.dispose();
  }

  // Requests microphone permission, opens the recorder,
  // and configures the audio session.
  Future<void> _initializeRecorder() async {
    _recorder = FlutterSoundRecorder();

    var status = await Permission.microphone.request();
    if (status != PermissionStatus.granted) {
      setState(() {
        // "Microphone permission has not been granted."
        _errorText = "マイクの使用許可がありません。";
      });
      throw RecordingPermissionException("Microphone permission not granted");
    }

    try {
      await _recorder!.openRecorder();
      final session = await AudioSession.instance;
      await session.configure(AudioSessionConfiguration(
        avAudioSessionCategory: AVAudioSessionCategory.playAndRecord,
        avAudioSessionCategoryOptions:
            AVAudioSessionCategoryOptions.allowBluetooth |
                AVAudioSessionCategoryOptions.defaultToSpeaker,
        avAudioSessionMode: AVAudioSessionMode.spokenAudio,
        avAudioSessionRouteSharingPolicy:
            AVAudioSessionRouteSharingPolicy.defaultPolicy,
        avAudioSessionSetActiveOptions: AVAudioSessionSetActiveOptions.none,
        androidAudioAttributes: const AndroidAudioAttributes(
          contentType: AndroidAudioContentType.speech,
          flags: AndroidAudioFlags.none,
          usage: AndroidAudioUsage.voiceCommunication,
        ),
        androidAudioFocusGainType: AndroidAudioFocusGainType.gain,
        androidWillPauseWhenDucked: true,
      ));
      setState(() {
        _recorderInitialized = true;
      });
    } catch (e) {
      setState(() {
        // "Failed to initialize the recorder"
        _errorText = "レコーダーの初期化に失敗しました: $e";
      });
    }
  }

  Future<void> _initializePlayer() async {
    _player = FlutterSoundPlayer();
    try {
      await _player!.openPlayer();
      setState(() {
        _playerInitialized = true;
      });
    } catch (e) {
      setState(() {
        // "Failed to initialize the player"
        _errorText = "プレイヤーの初期化に失敗しました: $e";
      });
    }
  }

  // Records from the microphone into recording.m4a in the temporary directory.
  Future<void> _startRecording() async {
    if (!_recorderInitialized) return;
    final directory = await getTemporaryDirectory();
    _recordedFile = File('${directory.path}/recording.m4a');
    await _recorder!.startRecorder(
      toFile: _recordedFile!.path,
      codec: _codec,
      audioSource: AudioSource.microphone,
    );
    setState(() {
      _isRecording = true;
    });
  }

  Future<void> _stopRecording() async {
    if (!_recorderInitialized) return;
    await _recorder!.stopRecorder();
    setState(() {
      _isRecording = false;
    });
    print('Recorded file path: ${_recordedFile!.path}');
  }

  Future<void> _playRecording() async {
    if (!_playerInitialized || _recordedFile == null) return;
    await _player!.startPlayer(
      fromURI: _recordedFile!.path,
      codec: _codec,
      whenFinished: () {
        setState(() {
          _isPlaying = false;
        });
      },
    );
    setState(() {
      _isPlaying = true;
    });
  }

  Future<void> _stopPlaying() async {
    if (!_playerInitialized) return;
    await _player!.stopPlayer();
    setState(() {
      _isPlaying = false;
    });
  }

  // Returns the recorded file path to the previous screen.
  void _onComplete() {
    Navigator.of(context).pop(_recordedFile?.path);
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: const Text('音声入力'), // "Voice input"
      ),
      body: Padding(
        padding: const EdgeInsets.all(16.0),
        child: Column(
          children: [
            Row(
              mainAxisAlignment: MainAxisAlignment.center,
              children: [
                ElevatedButton(
                  onPressed: _isRecording ? null : _startRecording,
                  child: const Text('録音開始'), // "Start recording"
                ),
                const SizedBox(width: 16),
                ElevatedButton(
                  onPressed: _isRecording ? _stopRecording : null,
                  child: const Text('録音停止'), // "Stop recording"
                ),
              ],
            ),
            const SizedBox(height: 16),
            Row(
              mainAxisAlignment: MainAxisAlignment.center,
              children: [
                ElevatedButton(
                  onPressed: _isPlaying ? _stopPlaying : _playRecording,
                  // "Stop playback" / "Play"
                  child: Text(_isPlaying ? '再生停止' : '再生'),
                ),
              ],
            ),
            const SizedBox(height: 16),
            ElevatedButton(
              onPressed: _recordedFile == null ? null : _onComplete,
              child: const Text('完了'), // "Done"
            ),
            if (_errorText != null)
              Padding(
                padding: const EdgeInsets.all(8.0),
                child: Text(
                  _errorText!,
                  style: const TextStyle(color: Colors.red),
                ),
              ),
          ],
        ),
      ),
    );
  }
}
```

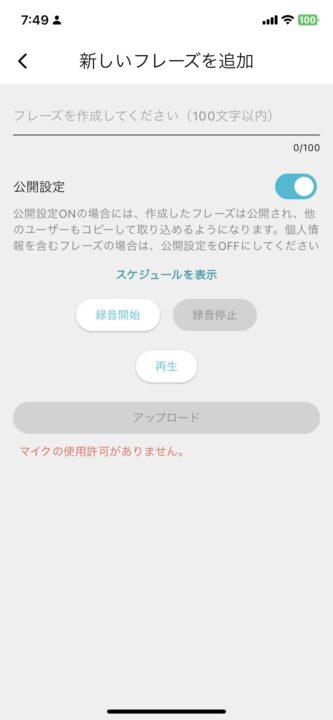

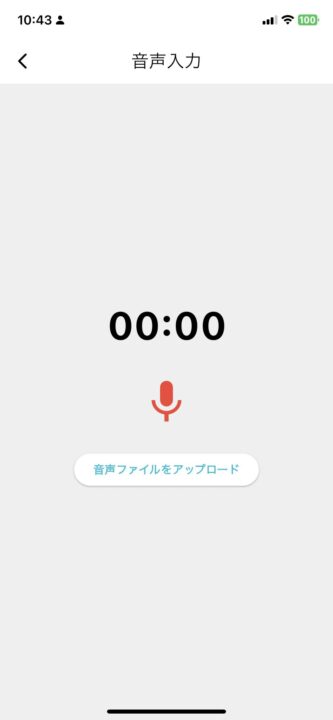

Change the UI to resemble iOS Voice Memos

The current UI is too simplistic, so I would like to change it to something closer to the Voice Memos app on the iPhone.

- Recording button design: large red round button placed in the center.

- Time Label: Text to indicate recording time or playback time.

- Playback button: Button to play back recorded audio.

- Waveform display: Real-time display of the audio waveform during recording.

UI/UX for voice input

- When the screen is opened, only the record button (microphone icon) is displayed.

- When recording is started, the button switches to the Stop Recording button.

- When recording is stopped, the playback button appears and switches to the stop button during playback.

- When recording or playback is complete, the Done button appears.
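The visibility rules above can be written as a small pure function, which makes them easy to check separately from the widget tree. This is only a sketch: `VisibleControls` and `visibleControls` are hypothetical names introduced here, and the conditions are the `if` conditions used in the `build` method of this screen.

```dart
/// Which controls the screen shows for a given state (hypothetical type).
class VisibleControls {
  const VisibleControls({
    required this.recordButton,
    required this.playButton,
    required this.doneButton,
  });

  final bool recordButton;
  final bool playButton;
  final bool doneButton;
}

/// Maps the screen state to the set of visible controls.
VisibleControls visibleControls({
  required bool isRecording,
  required bool isPlaying,
  required bool hasFile,
}) {
  return VisibleControls(
    // The record/stop-recording button is hidden only during playback.
    recordButton: !isPlaying,
    // The play/stop button needs a file and is hidden while recording.
    playButton: !isRecording && hasFile,
    // The Done button appears once a file exists and nothing is running.
    doneButton: hasFile && !isRecording && !isPlaying,
  );
}
```

Keeping the rules in one function means a change to the UI flow only has to be made in one place.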

```dart
import 'dart:async';
import 'dart:io';

import 'package:audio_session/audio_session.dart';
import 'package:flutter/material.dart';
import 'package:flutter_sound/flutter_sound.dart';
import 'package:flutter_sound_platform_interface/flutter_sound_recorder_platform_interface.dart';
import 'package:path_provider/path_provider.dart';
import 'package:permission_handler/permission_handler.dart';

class RecordVoiceScreen extends StatefulWidget {
  @override
  _RecordVoiceScreenState createState() => _RecordVoiceScreenState();
}

class _RecordVoiceScreenState extends State<RecordVoiceScreen> {
  FlutterSoundRecorder? _recorder;
  FlutterSoundPlayer? _player;
  bool _isRecording = false;
  bool _isPlaying = false;
  bool _recorderInitialized = false;
  bool _playerInitialized = false;
  File? _recordedFile;
  String? _errorText;
  final Codec _codec = Codec.aacMP4;

  // Added: timer and elapsed time for the recording time label.
  Timer? _timer;
  Duration _duration = Duration.zero;

  @override
  void initState() {
    super.initState();
    _initializeRecorder();
    _initializePlayer();
  }

  @override
  void dispose() {
    _timer?.cancel();
    _recorder?.closeRecorder();
    _player?.closePlayer();
    super.dispose();
  }

  Future<void> _initializeRecorder() async {
    _recorder = FlutterSoundRecorder();

    var status = await Permission.microphone.request();
    if (status != PermissionStatus.granted) {
      setState(() {
        // "Microphone permission has not been granted."
        _errorText = "マイクの使用許可がありません。";
      });
      throw RecordingPermissionException("Microphone permission not granted");
    }

    try {
      await _recorder!.openRecorder();
      final session = await AudioSession.instance;
      await session.configure(AudioSessionConfiguration(
        avAudioSessionCategory: AVAudioSessionCategory.playAndRecord,
        avAudioSessionCategoryOptions:
            AVAudioSessionCategoryOptions.allowBluetooth |
                AVAudioSessionCategoryOptions.defaultToSpeaker,
        avAudioSessionMode: AVAudioSessionMode.spokenAudio,
        avAudioSessionRouteSharingPolicy:
            AVAudioSessionRouteSharingPolicy.defaultPolicy,
        avAudioSessionSetActiveOptions: AVAudioSessionSetActiveOptions.none,
        androidAudioAttributes: const AndroidAudioAttributes(
          contentType: AndroidAudioContentType.speech,
          flags: AndroidAudioFlags.none,
          usage: AndroidAudioUsage.voiceCommunication,
        ),
        androidAudioFocusGainType: AndroidAudioFocusGainType.gain,
        androidWillPauseWhenDucked: true,
      ));
      setState(() {
        _recorderInitialized = true;
      });
    } catch (e) {
      setState(() {
        // "Failed to initialize the recorder"
        _errorText = "レコーダーの初期化に失敗しました: $e";
      });
    }
  }

  Future<void> _initializePlayer() async {
    _player = FlutterSoundPlayer();
    try {
      await _player!.openPlayer();
      setState(() {
        _playerInitialized = true;
      });
    } catch (e) {
      setState(() {
        // "Failed to initialize the player"
        _errorText = "プレイヤーの初期化に失敗しました: $e";
      });
    }
  }

  Future<void> _startRecording() async {
    if (!_recorderInitialized) return;
    final directory = await getTemporaryDirectory();
    _recordedFile = File('${directory.path}/recording.m4a');
    await _recorder!.startRecorder(
      toFile: _recordedFile!.path,
      codec: _codec,
      audioSource: AudioSource.microphone,
    );
    setState(() {
      _isRecording = true;
      _duration = Duration.zero;
    });
    // Advance the displayed time by one second on every tick.
    _timer = Timer.periodic(const Duration(seconds: 1), (timer) {
      setState(() {
        _duration += const Duration(seconds: 1);
      });
    });
  }

  Future<void> _stopRecording() async {
    if (!_recorderInitialized) return;
    await _recorder!.stopRecorder();
    _timer?.cancel();
    setState(() {
      _isRecording = false;
    });
    print('Recorded file path: ${_recordedFile!.path}');
  }

  Future<void> _playRecording() async {
    if (!_playerInitialized || _recordedFile == null) return;
    await _player!.startPlayer(
      fromURI: _recordedFile!.path,
      codec: _codec,
      whenFinished: () {
        setState(() {
          _isPlaying = false;
        });
      },
    );
    setState(() {
      _isPlaying = true;
    });
  }

  Future<void> _stopPlaying() async {
    if (!_playerInitialized) return;
    await _player!.stopPlayer();
    setState(() {
      _isPlaying = false;
    });
  }

  void _onComplete() {
    Navigator.of(context).pop(_recordedFile?.path);
  }

  // Formats a duration as mm:ss for the time label.
  String _formatDuration(Duration duration) {
    String twoDigits(int n) => n.toString().padLeft(2, "0");
    String twoDigitMinutes = twoDigits(duration.inMinutes.remainder(60));
    String twoDigitSeconds = twoDigits(duration.inSeconds.remainder(60));
    return "$twoDigitMinutes:$twoDigitSeconds";
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: const Text('音声入力'), // "Voice input"
      ),
      body: Padding(
        padding: const EdgeInsets.all(16.0),
        child: Column(
          mainAxisAlignment: MainAxisAlignment.center,
          children: [
            Text(
              _formatDuration(_duration),
              style:
                  const TextStyle(fontSize: 48.0, fontWeight: FontWeight.bold),
            ),
            const SizedBox(height: 20),
            Row(
              mainAxisAlignment: MainAxisAlignment.center,
              children: [
                // Record / stop-recording button, hidden during playback.
                if (!_isPlaying)
                  IconButton(
                    icon: Icon(_isRecording ? Icons.stop : Icons.mic),
                    iconSize: 64.0,
                    onPressed: _isRecording ? _stopRecording : _startRecording,
                    color: Colors.red,
                  ),
                // Play / stop button, shown once a recording exists.
                if (!_isRecording && _recordedFile != null)
                  IconButton(
                    icon: Icon(_isPlaying ? Icons.stop : Icons.play_arrow),
                    iconSize: 64.0,
                    onPressed: _isPlaying ? _stopPlaying : _playRecording,
                    color: Colors.green,
                  ),
              ],
            ),
            const SizedBox(height: 20),
            if (_recordedFile != null && !_isRecording && !_isPlaying)
              ElevatedButton(
                onPressed: _onComplete,
                child: const Text('完了'), // "Done"
              ),
            if (_errorText != null)
              Padding(
                padding: const EdgeInsets.all(8.0),
                child: Text(
                  _errorText!,
                  style: const TextStyle(color: Colors.red),
                ),
              ),
          ],
        ),
      ),
    );
  }
}
```

The following voice recording and playback screen was displayed.

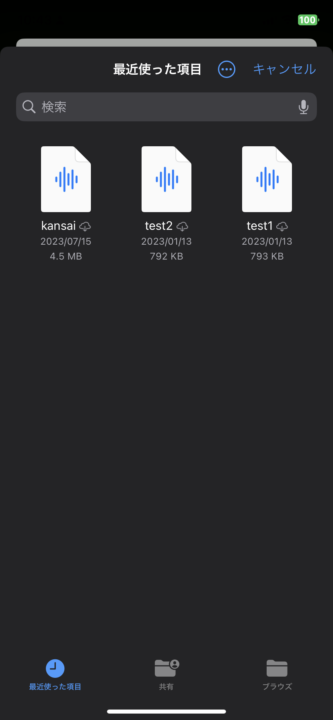
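The time label in this screen is driven by a periodic timer that adds one second per tick. Timer callbacks can be delayed when the app is busy, so the displayed time may drift slightly from the real elapsed time. One alternative, sketched here under that assumption, is to measure elapsed time with Dart's `Stopwatch` and use the timer only to trigger redraws. `RecordingClock` and `formatElapsed` are hypothetical names introduced for illustration.

```dart
/// Measures recording time with a Stopwatch instead of counting timer ticks.
class RecordingClock {
  final Stopwatch _stopwatch = Stopwatch();

  /// Resets the clock to zero and starts measuring.
  void start() {
    _stopwatch
      ..reset()
      ..start();
  }

  /// Stops measuring; [elapsed] keeps the final value.
  void stop() => _stopwatch.stop();

  Duration get elapsed => _stopwatch.elapsed;
}

/// Formats a duration as mm:ss, or h:mm:ss once it reaches one hour.
String formatElapsed(Duration d) {
  String two(int n) => n.toString().padLeft(2, '0');
  final minutes = two(d.inMinutes % 60);
  final seconds = two(d.inSeconds % 60);
  return d.inHours > 0 ? '${d.inHours}:$minutes:$seconds' : '$minutes:$seconds';
}
```

In the screen, the periodic timer callback would then call `setState` with `clock.elapsed` rather than incrementing a counter.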

Support audio file upload

Users may want to upload existing audio files as well as record new audio, so the screen also needs to support audio file uploads.

Addition of file upload function

- Use the file_picker package to let users select audio files.

- Restrict the selectable extensions with allowedExtensions (mp3, wav, m4a, aac).

- UI fix: add an upload button so that users can select and upload files.
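The same extension restriction can also be expressed as a small check in plain Dart, for example to re-validate a path before it is sent to the server. This is a minimal sketch; `allowedAudioExtensions` and `isAllowedAudioFile` are hypothetical names and are not part of file_picker.

```dart
/// Extensions accepted by the upload screen (same list as allowedExtensions).
const allowedAudioExtensions = {'mp3', 'wav', 'm4a', 'aac'};

/// Returns true when [path] ends with one of the allowed audio extensions.
/// The comparison is case-insensitive, so `meow.MP3` is accepted.
bool isAllowedAudioFile(String path) {
  final name = path.split('/').last;
  final dot = name.lastIndexOf('.');
  // Reject names without an extension, hidden files such as ".aac",
  // and names that end with a dot.
  if (dot <= 0 || dot == name.length - 1) return false;
  final extension = name.substring(dot + 1).toLowerCase();
  return allowedAudioExtensions.contains(extension);
}
```

Note that this only checks the file name. The playback code in this article always passes Codec.aacMP4, so a selected mp3 or wav file may need a different codec value, which this sketch does not address.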

Here is the code with the audio file upload function added.

```dart
import 'dart:async';
import 'dart:io';

import 'package:audio_session/audio_session.dart';
// App-specific widgets (provides AppButton).
import 'package:clocky_app/widgets/buttons.dart';
import 'package:file_picker/file_picker.dart';
import 'package:flutter/material.dart';
import 'package:flutter_sound/flutter_sound.dart';
import 'package:flutter_sound_platform_interface/flutter_sound_recorder_platform_interface.dart';
import 'package:path_provider/path_provider.dart';
import 'package:permission_handler/permission_handler.dart';

class RecordVoiceScreen extends StatefulWidget {
  @override
  _RecordVoiceScreenState createState() => _RecordVoiceScreenState();
}

class _RecordVoiceScreenState extends State<RecordVoiceScreen> {
  FlutterSoundRecorder? _recorder;
  FlutterSoundPlayer? _player;
  bool _isRecording = false;
  bool _isPlaying = false;
  bool _recorderInitialized = false;
  bool _playerInitialized = false;
  File? _recordedFile;
  String? _errorText;
  final Codec _codec = Codec.aacMP4;
  Timer? _timer;
  Duration _duration = Duration.zero;

  @override
  void initState() {
    super.initState();
    _initializeRecorder();
    _initializePlayer();
  }

  @override
  void dispose() {
    _timer?.cancel();
    _recorder?.closeRecorder();
    _player?.closePlayer();
    super.dispose();
  }

  Future<void> _initializeRecorder() async {
    _recorder = FlutterSoundRecorder();

    var status = await Permission.microphone.request();
    if (status != PermissionStatus.granted) {
      setState(() {
        // "Microphone permission has not been granted."
        _errorText = "マイクの使用許可がありません。";
      });
      throw RecordingPermissionException("Microphone permission not granted");
    }

    try {
      await _recorder!.openRecorder();
      final session = await AudioSession.instance;
      await session.configure(AudioSessionConfiguration(
        avAudioSessionCategory: AVAudioSessionCategory.playAndRecord,
        avAudioSessionCategoryOptions:
            AVAudioSessionCategoryOptions.allowBluetooth |
                AVAudioSessionCategoryOptions.defaultToSpeaker,
        avAudioSessionMode: AVAudioSessionMode.spokenAudio,
        avAudioSessionRouteSharingPolicy:
            AVAudioSessionRouteSharingPolicy.defaultPolicy,
        avAudioSessionSetActiveOptions: AVAudioSessionSetActiveOptions.none,
        androidAudioAttributes: const AndroidAudioAttributes(
          contentType: AndroidAudioContentType.speech,
          flags: AndroidAudioFlags.none,
          usage: AndroidAudioUsage.voiceCommunication,
        ),
        androidAudioFocusGainType: AndroidAudioFocusGainType.gain,
        androidWillPauseWhenDucked: true,
      ));
      setState(() {
        _recorderInitialized = true;
      });
    } catch (e) {
      setState(() {
        // "Failed to initialize the recorder"
        _errorText = "レコーダーの初期化に失敗しました: $e";
      });
    }
  }

  Future<void> _initializePlayer() async {
    _player = FlutterSoundPlayer();
    try {
      await _player!.openPlayer();
      setState(() {
        _playerInitialized = true;
      });
    } catch (e) {
      setState(() {
        // "Failed to initialize the player"
        _errorText = "プレイヤーの初期化に失敗しました: $e";
      });
    }
  }

  Future<void> _startRecording() async {
    if (!_recorderInitialized) return;
    final directory = await getTemporaryDirectory();
    _recordedFile = File('${directory.path}/recording.m4a');
    await _recorder!.startRecorder(
      toFile: _recordedFile!.path,
      codec: _codec,
      audioSource: AudioSource.microphone,
    );
    setState(() {
      _isRecording = true;
      _duration = Duration.zero;
    });
    _timer = Timer.periodic(const Duration(seconds: 1), (timer) {
      setState(() {
        _duration += const Duration(seconds: 1);
      });
    });
  }

  Future<void> _stopRecording() async {
    if (!_recorderInitialized) return;
    await _recorder!.stopRecorder();
    _timer?.cancel();
    setState(() {
      _isRecording = false;
    });
    print('Recorded file path: ${_recordedFile!.path}');
  }

  Future<void> _playRecording() async {
    if (!_playerInitialized || _recordedFile == null) return;
    await _player!.startPlayer(
      fromURI: _recordedFile!.path,
      codec: _codec,
      whenFinished: () {
        setState(() {
          _isPlaying = false;
        });
      },
    );
    setState(() {
      _isPlaying = true;
    });
  }

  Future<void> _stopPlaying() async {
    if (!_playerInitialized) return;
    await _player!.stopPlayer();
    setState(() {
      _isPlaying = false;
    });
  }

  // Added: lets the user pick an existing audio file.
  // The picked file replaces any recording as the selected audio.
  Future<void> _uploadAudioFile() async {
    FilePickerResult? result = await FilePicker.platform.pickFiles(
      type: FileType.custom,
      allowedExtensions: ['mp3', 'wav', 'm4a', 'aac'],
    );

    if (result != null) {
      setState(() {
        _recordedFile = File(result.files.single.path!);
        _errorText = null;
      });
      print('Uploaded file path: ${_recordedFile!.path}');
    } else {
      setState(() {
        // "File selection was canceled."
        _errorText = "ファイル選択がキャンセルされました。";
      });
    }
  }

  void _onComplete() {
    Navigator.of(context).pop(_recordedFile?.path);
  }

  String _formatDuration(Duration duration) {
    String twoDigits(int n) => n.toString().padLeft(2, "0");
    String twoDigitMinutes = twoDigits(duration.inMinutes.remainder(60));
    String twoDigitSeconds = twoDigits(duration.inSeconds.remainder(60));
    return "$twoDigitMinutes:$twoDigitSeconds";
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: const Text('音声入力'), // "Voice input"
      ),
      body: Padding(
        padding: const EdgeInsets.all(16.0),
        child: Column(
          mainAxisAlignment: MainAxisAlignment.center,
          children: [
            Text(
              _formatDuration(_duration),
              style:
                  const TextStyle(fontSize: 48.0, fontWeight: FontWeight.bold),
            ),
            const SizedBox(height: 20),
            Row(
              mainAxisAlignment: MainAxisAlignment.center,
              children: [
                if (!_isPlaying)
                  IconButton(
                    icon: Icon(_isRecording ? Icons.stop : Icons.mic),
                    iconSize: 64.0,
                    onPressed: _isRecording ? _stopRecording : _startRecording,
                    color: Colors.red,
                  ),
                if (!_isRecording && _recordedFile != null)
                  IconButton(
                    icon: Icon(_isPlaying ? Icons.stop : Icons.play_arrow),
                    iconSize: 64.0,
                    onPressed: _isPlaying ? _stopPlaying : _playRecording,
                    color: Colors.green,
                  ),
              ],
            ),
            const SizedBox(height: 20),
            // Added: button for selecting an existing audio file.
            ElevatedButton(
              onPressed: _uploadAudioFile,
              child: const Text(
                '音声ファイルをアップロード', // "Upload audio file"
                style: TextStyle(
                  fontWeight: FontWeight.bold,
                ),
              ),
            ),
            const SizedBox(height: 20),
            if (_recordedFile != null && !_isRecording && !_isPlaying)
              AppButton(
                text: '完了', // "Done"
                onPressed: _onComplete,
              ),
            if (_errorText != null)
              Padding(
                padding: const EdgeInsets.all(8.0),
                child: Text(
                  _errorText!,
                  style: const TextStyle(color: Colors.red),
                ),
              ),
          ],
        ),
      ),
    );
  }
}
```

Users can complete (save) only one audio file (recorded or uploaded) on one screen. In other words, users can choose to complete either a recorded or an existing audio file, but not both at the same time.
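The "only one file per screen" rule follows from the fact that recording and file selection both write to the same `_recordedFile` field, so the later action replaces the earlier one. If the caller also needs to know where the audio came from, the selection could carry its origin. The following is a sketch only; `AudioOrigin`, `SelectedAudio`, and `AudioSelection` are hypothetical names that do not appear in the article's code.

```dart
/// Where the selected audio came from (hypothetical enum).
enum AudioOrigin { recorded, uploaded }

/// A selected audio file together with its origin.
class SelectedAudio {
  const SelectedAudio(this.path, this.origin);

  final String path;
  final AudioOrigin origin;
}

/// Holds at most one selected audio file; a new choice replaces the old one.
class AudioSelection {
  SelectedAudio? _current;

  SelectedAudio? get current => _current;

  void setRecorded(String path) {
    _current = SelectedAudio(path, AudioOrigin.recorded);
  }

  void setUploaded(String path) {
    _current = SelectedAudio(path, AudioOrigin.uploaded);
  }
}
```

With such a type, the Done handler could return a `SelectedAudio` instead of a bare path.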

Summary

With the flutter_sound and file_picker packages, the Flutter application can now record and play back audio and let users select existing audio files.

Next, we need to implement the process that uploads the audio to the server (AWS S3) using the file path obtained when voice input is completed. That will be covered in the next article.